To rank well in search engine result pages, the pages of your website must be indexed properly by the search engine bots. Search engine optimization is a technique that aims to optimize each page of the website so that it gets adequate importance from the search engines. However, sometimes indexing all pages is not desirable. In fact, indexing some pages of the website can be outright problematic and detrimental to the business interests of the company.

As an example, take the following situations:

• You are developing a new website on the hosting servers and you do not want the site to be indexed by the search engines until the development is complete.

• You want to capture leads from your website and have installed a lead capture form. Users who fill out the form are taken to a page that gives them a reward. This final page containing the reward needs to be kept out of the search engines. Otherwise, if it shows up in the search results, anyone can take the reward without filling in their details, and no leads are captured.

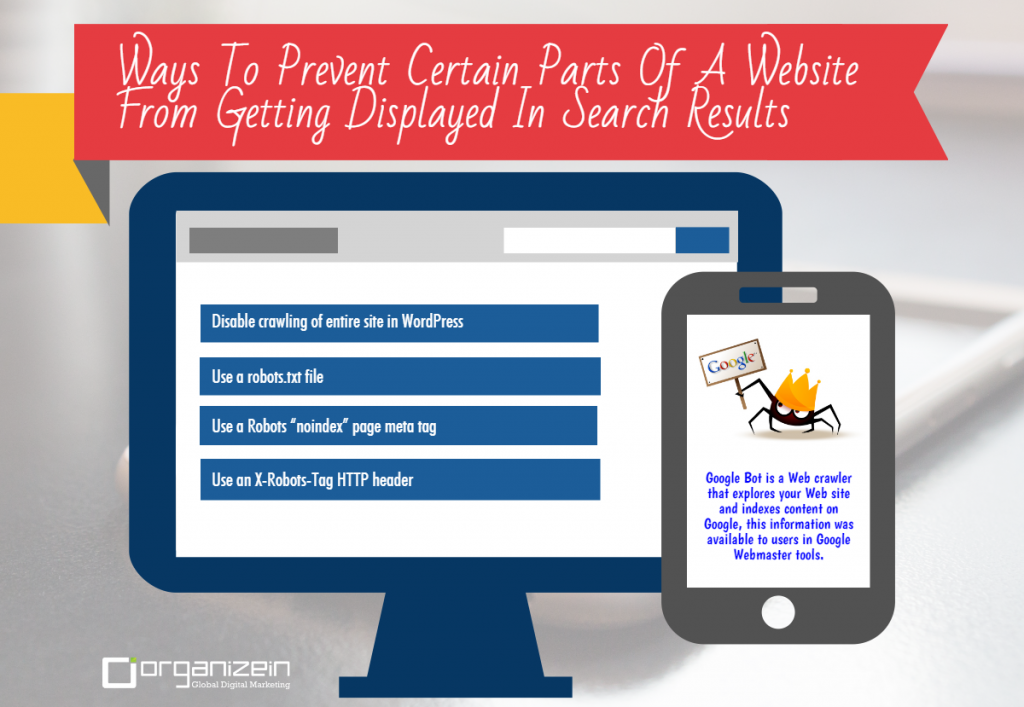

So let us see how we can restrict the site or some pages from being crawled by search engines.

• Disable crawling of entire site in WordPress

WordPress makes blocking an entire website very convenient. Log in to your WordPress backend and navigate to Settings → Reading. Then scroll down to Search Engine Visibility and enable the option “Discourage search engines from indexing this site”. This asks search engines not to index any part of the site, although it is up to each search engine to honor the request.

• Use a robots.txt file

Adding a robots.txt file in the root folder of your web hosting server allows you to control which parts of your site the bots can crawl. The benefit of this method is that you can block a few pages or all of them, certain directories, or some of the media on your website. Be careful when you create and modify robots.txt files: a mistake in the rules can stop the bots from crawling the entire site.
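Because a mistake in robots.txt can block more than you intended, it helps to test the rules before uploading the file. The following is a minimal sketch that uses Python's standard `urllib.robotparser` module. The rules, the `example.com` domain, the page paths and the helper name `is_crawl_allowed` are all made up for illustration and should be replaced with your own.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content: block one directory and one reward page
# for every bot, and leave the rest of the site crawlable.
ROBOTS_TXT = """\
User-agent: *
Disallow: /staging/
Disallow: /thank-you-reward.html
"""


def is_crawl_allowed(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if the given rules let user_agent crawl url."""
    parser = RobotFileParser()
    # parse() takes the file content as a list of lines.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # Print the verdict for a few sample paths on an assumed domain.
    for path in ("/", "/staging/index.html", "/thank-you-reward.html"):
        url = "https://example.com" + path
        print(path, is_crawl_allowed(ROBOTS_TXT, url))
```

Running a check like this against every page you care about is a quick way to confirm that only the intended pages and directories are blocked.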

• Use a Robots “noindex” page meta tag

Robots page meta tags allow you to exclude certain pages on the site from getting indexed. Place the following tag within the head section of the page you want to exclude from indexing:

`<meta name="robots" content="noindex">`

If at a later stage you want the pages to get indexed, you will just have to remove the tag. The disadvantage of this tag is that you will have to add it individually to every page that you want to block from the bots.
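Since the tag has to be added page by page, it is easy to miss one. Here is a rough sketch, using only Python's standard `html.parser` module, of how you might check whether a page's HTML carries a robots "noindex" directive. The class name `RobotsMetaParser`, the helper `has_noindex` and the sample pages are assumptions made for this example.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() != "robots":
            return
        # The content attribute may hold several comma-separated directives.
        content = attributes.get("content") or ""
        for part in content.split(","):
            if part.strip():
                self.directives.add(part.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the HTML contains a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


# Hypothetical pages: a reward page that should stay hidden and a public page.
REWARD_PAGE = '<html><head><meta name="robots" content="noindex"></head></html>'
PUBLIC_PAGE = "<html><head><title>Home</title></head></html>"

if __name__ == "__main__":
    print("reward page blocked:", has_noindex(REWARD_PAGE))
    print("public page blocked:", has_noindex(PUBLIC_PAGE))
```

The same helper could be run over the saved HTML of each page you meant to block, which makes a forgotten tag easy to spot.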

• Use an X-Robots-Tag HTTP header

You can add the X-Robots-Tag header to the HTTP response for any URL. This tells Google not to display the affected pages in the search results. This is done by adding the following directive to the .htaccess file: